Overview

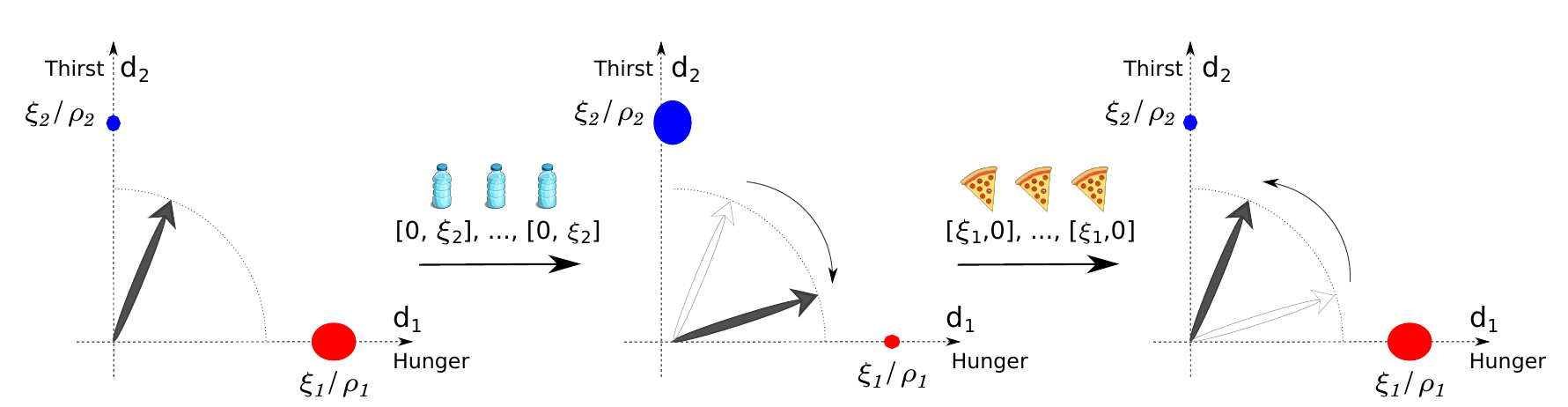

We present a motivational system for an agent undergoing reinforcement learning (RL), which enables it to balance multiple drives, each of which is satiated by different types of stimuli. Inspired by drive reduction theory, it uses Minor Component Analysis (MCA) to model the agent's internal drive state, and modulates incoming stimuli on the basis of how strongly the stimulus satiates the currently active drive. The agent's dynamic policy continually changes through least-squares temporal difference updates. It automatically seeks stimuli that first satiate the most active internal drives, then the next most active drives, and so on. We prove that our algorithm is stable under certain conditions. Experimental results illustrate its behavior.
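The overview says that MCA models the internal drive state but does not give the update rule. The sketch below is a hedged illustration of MCA itself, not the authors' method. It estimates the minor component, which is the direction of least variance (the eigenvector of the input covariance with the smallest eigenvalue). It does this with a generic normalized anti-Hebbian rule. The function names `normalize`, `mca_step` and `estimate_minor_component`, the learning rate and the epoch count are hypothetical. How the minor component maps onto individual drives in this work is not specified here.

```python
import math
import random


def normalize(w):
    """Return w scaled to unit Euclidean length."""
    norm = math.sqrt(sum(v * v for v in w))
    return [v / norm for v in w]


def mca_step(w, x, lr):
    """One normalized anti-Hebbian update toward the minor component.

    y = w.x is the projection of the sample onto w. Stepping against
    y * x shrinks the variance captured by w; renormalizing keeps
    ||w|| = 1 so the rule does not collapse to the zero vector.
    """
    y = sum(wi * xi for wi, xi in zip(w, x))
    return normalize([wi - lr * y * xi for wi, xi in zip(w, x)])


def estimate_minor_component(samples, lr=0.01, epochs=20, seed=0):
    """Estimate the least-variance direction of zero-mean samples.

    Hypothetical helper: generic MCA, not the paper's drive model.
    """
    rng = random.Random(seed)
    w = normalize([rng.gauss(0.0, 1.0) for _ in range(len(samples[0]))])
    for _ in range(epochs):
        for x in samples:
            w = mca_step(w, x, lr)
    return w
```

In expectation, each step is one iteration of power iteration on `I - lr * C`, where `C` is the input covariance. The estimate therefore drifts toward the eigenvector of `C` with the smallest eigenvalue, provided `lr` times the largest input variance stays well below 1. For zero-mean inputs whose third coordinate has the smallest variance, the returned vector should align with that axis, up to sign.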
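The policy is described as changing through least-squares temporal difference (LSTD) updates. The sketch below is a minimal illustration of the value-estimation step in batch LSTD(0) with linear features. It is an assumption, not this project's code. It builds `A = sum phi (phi - gamma * phi_next)^T` and `b = sum phi * r`, then solves `A theta = b`. The `reward` argument stands in for the drive-modulated signal, whose exact form is not given here. The names `solve` and `lstd` and the small ridge term `reg` are hypothetical. A continually updating agent would more likely use an incremental (recursive) LSTD variant rather than this batch solve.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def lstd(transitions, num_features, gamma=0.9, reg=1e-6):
    """Batch LSTD(0): return linear value weights theta.

    transitions: iterable of (phi, reward, phi_next); phi_next is all
    zeros for terminal transitions. reg adds a small ridge term to the
    diagonal of A so the system stays solvable with sparse data.
    """
    A = [[reg if i == j else 0.0 for j in range(num_features)]
         for i in range(num_features)]
    b = [0.0] * num_features
    for phi, reward, phi_next in transitions:
        diff = [p - gamma * q for p, q in zip(phi, phi_next)]
        for i in range(num_features):
            b[i] += phi[i] * reward
            for j in range(num_features):
                A[i][j] += phi[i] * diff[j]
    return solve(A, b)
```

As a usage example, take two states with one-hot features. A reward of 1 moves the agent from the first state to the second, and a reward of 2 then ends the episode. With `gamma = 0.9`, the weights come out close to `[2.8, 2.0]`, since the first state's value is 1 + 0.9 × 2.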

Videos

Software

Python (soon)